"The Thalmann model, on the other hand, treats uptake and washout kinetics as dissimilar: While gas uptake is still represented as exponential, the washout is modeled by a function with a linear component. This means that gas washout is treated as a slower process than gas uptake."

Has this been compared to any experimentally measured rates of off-gassing from an aqueous medium, or from the human body? Couldn't they put people in a chamber (or on a special rebreather) that accurately measures the actual gases coming out of them? Maybe using nitrogen or helium isotopes as tracers?
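To make the quoted asymmetry concrete for myself, here is a toy single-compartment sketch. Everything in it is my own assumption and not the NEDU parameterization: the 10-minute half-time, the 0.5 bar `crossover` threshold, the pressures, and the choice to set the linear washout rate equal to the exponential rate at the crossover so the curve stays continuous.

```python
import math

def step_exponential(p_tissue, p_ambient, k, dt):
    """Exact one-step update for dP/dt = k * (p_ambient - p_tissue)."""
    return p_ambient + (p_tissue - p_ambient) * math.exp(-k * dt)

def step_exp_linear(p_tissue, p_ambient, k, dt, crossover):
    """Exponential uptake; washout capped at a constant (linear) rate.

    ASSUMPTION: the linear rate is k * crossover, applied whenever the
    tissue exceeds ambient by more than `crossover` (hypothetical value).
    """
    excess = p_tissue - p_ambient
    if excess > crossover:
        # Linear phase: constant rate, clamped so one step cannot
        # overshoot the boundary where the exponential phase resumes.
        return max(p_tissue - k * crossover * dt, p_ambient + crossover)
    return step_exponential(p_tissue, p_ambient, k, dt)

def simulate(step, p_start, p_ambient, minutes, dt=0.1, **params):
    """Advance one compartment for `minutes` at constant ambient pressure."""
    p = p_start
    for _ in range(round(minutes / dt)):
        p = step(p, p_ambient, dt=dt, **params)
    return p

k = math.log(2) / 10.0  # hypothetical 10-minute half-time

# Washout: tissue at 4.0 bar, ambient drops to 1.0 bar, wait 20 minutes.
washout_exp = simulate(step_exponential, 4.0, 1.0, 20, k=k)
washout_el = simulate(step_exp_linear, 4.0, 1.0, 20, k=k, crossover=0.5)

# Uptake: tissue at 1.0 bar, ambient rises to 4.0 bar, wait 20 minutes.
uptake_exp = simulate(step_exponential, 1.0, 4.0, 20, k=k)
uptake_el = simulate(step_exp_linear, 1.0, 4.0, 20, k=k, crossover=0.5)

print(f"washout after 20 min: exponential {washout_exp:.2f}, exp-linear {washout_el:.2f}")
print(f"uptake after 20 min:  exponential {uptake_exp:.2f}, exp-linear {uptake_el:.2f}")
```

With these made-up numbers the two variants load identically, but the exp-linear one retains far more gas after the same 20 minutes of washout. That gap is exactly what I would want checked against measured off-gassing.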

Regarding the linked NEDU model-fitting study: if I understand correctly, they back-fit to a pre-existing database that is relatively sparse for the extra-deep dives under consideration. Also, few actual DCS incidents occurred in any of the training data (Table 8)?

I am curious what kinds of standard GF values they would have predicted if they had trained a model specifically on that, alongside this Thalmann model. Why train a model that is "too computationally expensive to be implemented in a diver-worn decompression computer", when you could fit GFs that easily work in our computers? Or was that also done? How do you know your new model is better at prediction than other models that you could also have fit?

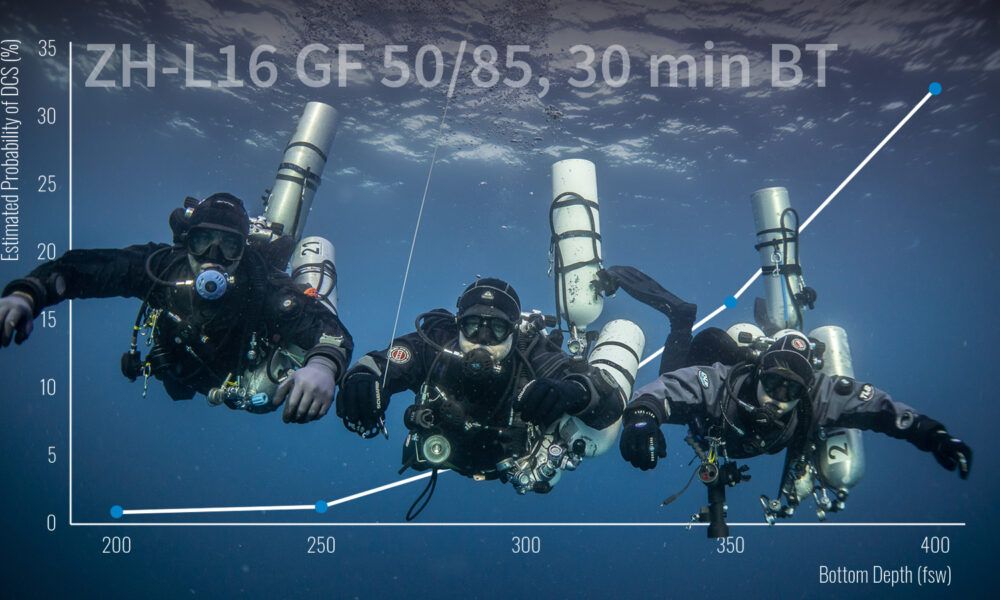

Given the data and training constraints, it seems very uncertain what the actual DCS rate (and prediction error) would be for a cohort of new dives using newly fitted models. When just a couple of divers out of 20+ experience some DCS, it seems very hard to be sure that the only explanation is small differences in deep stops. The uncertainty is high, and other factors are not adequately washed out by such a small n.
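To put a rough number on the small-n worry, here is a minimal sketch of a Wilson score interval for an observed incidence. The counts (2 of 20, and 20 of 200 for comparison) are illustrative values I made up, not figures from the study, and the choice of the Wilson interval is mine.

```python
import math

def wilson_interval(events, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 is ~95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = events / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half

# Hypothetical counts: same observed 10% rate, very different sample sizes.
for events, n in [(2, 20), (20, 200)]:
    low, high = wilson_interval(events, n)
    print(f"{events}/{n}: observed {events / n:.1%}, ~95% interval {low:.1%} to {high:.1%}")
```

For 2 of 20 the interval runs from roughly 3% to 30%, so two small cohorts can differ by a diver or two without that saying much about the underlying rates.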