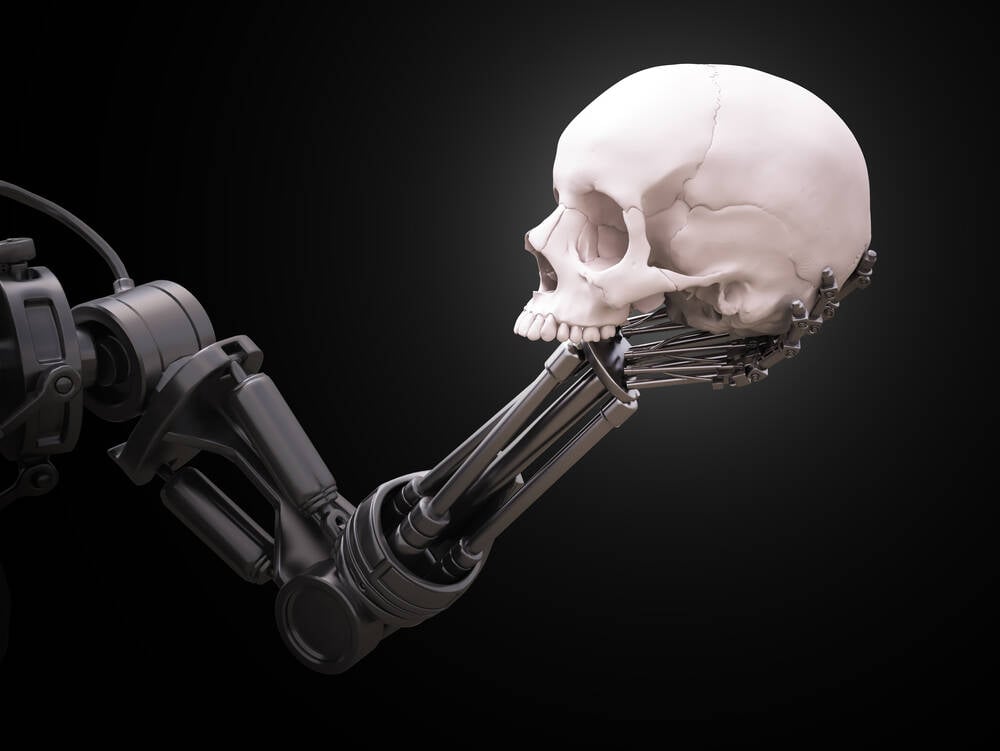

The people building the current models are very careful about the sources of their training texts to avoid this problem. They have also manually added stops that prevent the models from replying to, or generating content about, certain topics. Given the material on the internet that this system draws from, I'm surprised it isn't a rageaholic freak by now.

There were some earlier experiments without these boundaries, and they quickly went horribly wrong. For example:

In 2016, Microsoft’s Racist Chatbot Revealed the Dangers of Online Conversation

The bot learned language from people on Twitter—but it also learned values

spectrum.ieee.org
