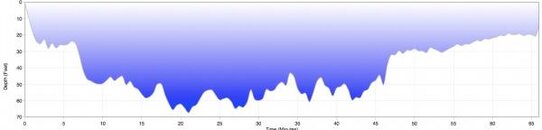

There is a concept in mathematics called curve-fitting. If you look at the OP's first dive and consider the standard tables, a table would model it as a plot where the dive went steeply down to 90 feet and stayed there, probably for around 50 minutes. At that point the plot would show an ascent and a 3-minute safety stop (except it wouldn't, because you would be far into deco by then). Such a plot fits the dive that was actually done very poorly, and would massively overestimate the nitrogen onloading during the dive.

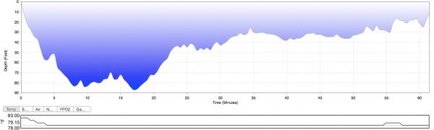

The multi-level dive I created for the eRDPML used about 20 minutes at 90 feet and then 30 at 40 feet. It's still a very crude overlay on the dive that was actually done, but a lot closer to it than the first one. This dive was permissible under the rules of the eRDPML, but it probably still significantly overestimated the nitrogen uptake during the dive.
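To put a rough number on the "square profile overestimates" point, here is a minimal single-compartment sketch using the classic Haldane exponential uptake equation. Everything in it is an assumption for illustration, not how the RDP or the OP's computer actually works: a hypothetical 40-minute half-time compartment, 33 feet of seawater per atmosphere, air at 79% nitrogen, instant descents and level changes, and no water-vapour correction. It compares the table's view of dive 1 (50 minutes at 90 feet) with the multi-level approximation (20 minutes at 90, then 30 at 40).

```python
import math

# Illustrative assumptions only (NOT the RDP's or any computer's real model):
# sea-level surface, 33 fsw per atm, air = 79% N2, instant depth changes,
# no water-vapour correction, one hypothetical 40-minute half-time compartment.
SURFACE_ATM = 1.0
FSW_PER_ATM = 33.0
FN2 = 0.79
HALF_TIME = 40.0  # hypothetical compartment half-time, minutes


def inspired_n2(depth_ft: float) -> float:
    """Inspired nitrogen partial pressure (atm) at a given depth."""
    return (SURFACE_ATM + depth_ft / FSW_PER_ATM) * FN2


def tissue_after(p_start: float, depth_ft: float, minutes: float, half_time: float) -> float:
    """Haldane equation: tissue N2 approaches the inspired value exponentially."""
    k = math.log(2) / half_time
    p_insp = inspired_n2(depth_ft)
    return p_insp + (p_start - p_insp) * math.exp(-k * minutes)


def profile_loading(segments, half_time: float) -> float:
    """Tissue N2 (atm) after a list of (depth_ft, minutes) constant-depth segments."""
    p = inspired_n2(0.0)  # start saturated at the surface
    for depth, minutes in segments:
        p = tissue_after(p, depth, minutes, half_time)
    return p


square = [(90, 50)]                # table view: the whole dive at max depth
multilevel = [(90, 20), (40, 30)]  # eRDPML-style multi-level approximation

print(f"square:     {profile_loading(square, HALF_TIME):.3f} atm N2")
print(f"multilevel: {profile_loading(multilevel, HALF_TIME):.3f} atm N2")
```

Under these assumptions the square profile ends with noticeably more nitrogen in the compartment than the multi-level one, and by the reasoning above the dive that was actually done likely loaded less than either.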

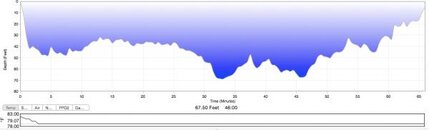

The second dive is really hard to approximate. Obviously, a square plot with a max depth close to 90 feet in NO way matches what was done. The eRDPML strongly dislikes the fact that the early part of the dive is shallow and the middle part is deep; it wouldn't even let me enter that profile without immediately flagging it as outside its parameters. Even when I put the deep part of the dive at the beginning, it did not like the resulting combination, which again poorly matches the dive that was done. With a one-hour surface interval, the program considered the second dive outside of acceptable parameters.

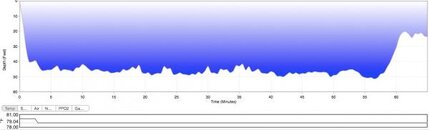

Now, the PADI RDP and the OP's computer algorithm may differ in what they consider acceptable levels of nitrogen loading in various compartments. They may use different numbers of compartments with different half-lives, and make different assumptions about the maximum tolerated overpressure gradient in each compartment and about how big a role bubbles play in the gas dynamics. There are a LOT of things that are "chosen" about decompression algorithms, and the validity of those choices is argued vigorously among the experts (see the recent thread on deep stops, which has some heavy hitters participating and differing significantly). A device that takes iterative depth data and computes continuously is going to estimate the nitrogen loading in the body much more accurately, IF the assumptions made in the algorithm's construction are actually valid. But if method A consistently OVERestimates the nitrogen compared to method B, and both draw the line at the same nitrogen loading, then method A will result in a much shorter dive, where the ACTUAL total nitrogen content in the various tissues is much lower than the program thinks it is. If you stay much further away from the acceptable limits, you should, in theory, have a lower risk of DCS.
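To illustrate how much those "chosen" parameters matter, here is a sketch that computes a no-stop time as the moment the first compartment reaches its surfacing limit. The two compartment sets (half-times and limits) are invented placeholders, not the values used by the RDP, the eRDPML, Bühlmann ZH-L16, or any dive computer. The sketch also assumes starting at surface saturation, instant descent, 33 fsw per atmosphere, and 79% nitrogen.

```python
import math

FSW_PER_ATM = 33.0
FN2 = 0.79

# HYPOTHETICAL models: half-time (min) -> max tolerated N2 pressure (atm) at the surface.
# Invented for illustration; not taken from any real table or dive computer.
model_a = {5: 3.0, 10: 2.5, 20: 2.1, 40: 1.8, 80: 1.6}
model_b = {10: 2.6, 30: 1.9, 60: 1.6}  # fewer compartments, different half-times


def inspired_n2(depth_ft: float) -> float:
    """Inspired nitrogen partial pressure (atm) at a given depth."""
    return (1.0 + depth_ft / FSW_PER_ATM) * FN2


def no_stop_time(depth_ft: float, compartments: dict) -> float:
    """Minutes at depth until the first compartment reaches its limit.

    Solves the Haldane equation for t. Returns math.inf if no compartment
    can ever reach its limit at this depth. Limits must exceed surface N2.
    """
    p0 = inspired_n2(0.0)
    p_insp = inspired_n2(depth_ft)
    shortest = math.inf
    for half_time, limit in compartments.items():
        if p_insp <= limit:
            continue  # this compartment never reaches its limit at this depth
        k = math.log(2) / half_time
        t = -math.log((limit - p_insp) / (p0 - p_insp)) / k
        shortest = min(shortest, t)
    return shortest


for name, model in (("model_a", model_a), ("model_b", model_b)):
    print(f"{name}: {no_stop_time(90, model):.1f} min at 90 ft")
```

The two made-up models give different no-stop times at the same depth purely because of their compartment choices, which is the kind of divergence between algorithms described above.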

The problem is that DCS is so rare to begin with, in properly executed recreational dives, that detecting anything but an enormous difference between two approaches to computing limits would require many, many dives and a lot of careful data collection. Since we rarely dive identical profiles, under identical conditions, with divers who have identical personal risk factors, those data really can't be collected outside of a formal study. And formal studies of this are quite difficult to get through an IRB, because if a certain percentage of the human subjects are expected to be injured by the protocol, it's unlikely to be approved. Such studies are also extremely expensive, which is why there are few of them, and they involve small numbers of subjects. And when you have small numbers of subjects, individual factors can become cripplingly important and destroy the validity of the study.
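To give a feel for "many, many dives", here is a back-of-the-envelope sample size using the standard normal-approximation formula for comparing two proportions. The DCS rates (2 versus 1 case per 10,000 dives), 5% significance, and 80% power are hypothetical numbers picked only to show the scale; the normal approximation is also rough for events this rare.

```python
import math
from statistics import NormalDist


def dives_per_group(p1: float, p2: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate dives needed per group for a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


# HYPOTHETICAL DCS rates, chosen only to show scale:
# 2 cases per 10,000 dives under one algorithm vs 1 per 10,000 under another.
n = dives_per_group(2e-4, 1e-4)
print(f"about {n:,} dives per group")
```

Under these assumptions, even halving an already rare rate takes well over two hundred thousand dives per group, before accounting for differing profiles, conditions, and divers.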